In this video I talk about using Houdini as a scene assembler. This topic will recur in future posts, as Houdini is becoming a very popular tool for look-dev, lighting, rendering and layout, among others.

In this case I go through bundles, takes and ROPs, and how we use them while lighting shots in visual effects projects.

You will learn:

- Bundles, takes, ROPs

- Alembic import

- Different ways of assigning materials

- Create look-dev collections

- Generate .ass files

- Create render layers

- Create quick slap comps

- Override materials

Check it out here.


Katana, constrain lights to Alembic geometry /

One of the most common situations while lighting a shot is attaching a CG light in your scene assembler to an Alembic cache exported from Maya. This is very simple to do in Katana; let’s have a look.

I’m using this simple animation of a car spinning around.

In most cases you need an object within the Alembic cache that has the animation baked into it. The usual approach is to use a locator: snap it onto one of the car's light geometries and parent-constrain it to the car's master control. Then bake the locator's animation and export it to Katana with the rest of the Alembic cache.

In Katana, create a gafferThree node but do not place any lights yet. It is better to set up the constraint first; otherwise you might have to deal with offset issues later on.

Use a parentChildConstraint node, with the gaffer node in the basePath and the car's locator in the target.

Now place both headlights according to the car's model. If you press play, they should follow the car's animation perfectly.

If you forget to set up the parentConstraint before adding lights to the gaffer, you might have to keep track of the offset and compensate for it. To actually see the values, you can add a constraintResolve and a transformEdit node to check the transformations.
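The offset compensation is plain transform math, so here is a generic sketch of it in Python. It is not Katana's API: it assumes the locator and the headlight can be treated as rigid transforms in the ground plane (position plus yaw), which is enough for a car spinning on flat ground, and all values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Xform2D:
    """Rigid transform in the ground (XZ) plane: rotate by yaw, then translate."""
    x: float
    z: float
    yaw: float  # radians

    def apply(self, px, pz):
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return (c * px - s * pz + self.x, s * px + c * pz + self.z)

    def compose(self, other):
        """Return self * other, i.e. apply `other` first, then `self`."""
        tx, tz = self.apply(other.x, other.z)
        return Xform2D(tx, tz, self.yaw + other.yaw)

    def inverse(self):
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        # Transposed rotation applied to the negated translation.
        return Xform2D(-(c * self.x + s * self.z), -(-s * self.x + c * self.z), -self.yaw)


def constraint_offset(parent_at_bind, child_world_at_bind):
    """Local offset that keeps the child where it was when the constraint was created."""
    return parent_at_bind.inverse().compose(child_world_at_bind)


def constrained_world(parent_now, offset):
    """World transform of the constrained child on the current frame."""
    return parent_now.compose(offset)


# Hypothetical values: locator and hand-placed headlight on frame 1.
locator_f1 = Xform2D(10.0, 0.0, 0.0)
headlight_f1 = Xform2D(12.0, 1.0, 0.0)
offset = constraint_offset(locator_f1, headlight_f1)

# A later frame: the car has moved and turned 90 degrees.
locator_f10 = Xform2D(0.0, 10.0, math.pi / 2)
headlight_f10 = constrained_world(locator_f10, offset)
```

On the bind frame the constrained light lands exactly where it was placed; on every later frame it follows the locator with the stored offset.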

Katana fastrack promo 01 /

I’m working on a new training series called “Katana fastrack”. Here is the first promotional video.

Stay tuned; it will be available later this year.

Custom attributes in Clarisse /

Using custom attributes to establish different looks is something we have to deal with in many shots. In this short video I show you how to work with custom attributes between Maya and Clarisse.

Introduction to Gaffer 04 /

Part 04 of my introduction to Gaffer, where I talk mostly about volumes. I also mention a few good practices for look-dev, such as “fetching” textures, and so on.

Houdini as scene assembler part 03 /

In this post I will talk about using texture bitmaps and subdivision surfaces.

I have a material network with a couple of shaders, one for the body of this character and another for everything else. If I were using Arnold, I would have a shop network instead.

To bring in texture bitmaps, I use texture nodes when working with Mantra and image nodes when working with Arnold. The principled shader has tabs with inputs for textures, but I rarely use them; I always create nodes to take care of the texturing, because at the end of the day I never use only one texture per channel. More on this in future posts.

In Mantra, textures are multiplied by the albedo color, so if the base color is not white your textures will be tinted or darkened. Be careful with this.

With Mantra, the UDIM tag is textureName.%(UDIM)d.exr; with Arnold, it is textureName.<UDIM>.exr.
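Both tags resolve to the same standard UDIM tile numbers: tile 1001 covers UV 0 to 1, and the number grows by 1 per tile in U and by 10 per tile in V. A small sketch that computes the tile for a UV coordinate and converts a path between the two tag spellings quoted above; the helper names are hypothetical.

```python
import math

MANTRA_TAG = "%(UDIM)d"
ARNOLD_TAG = "<UDIM>"


def udim_from_uv(u, v):
    """Standard UDIM tile number: 10 tiles per row in U, rows stacked in V."""
    if not (0.0 <= u < 10.0) or v < 0.0:
        raise ValueError("UDIM tiles only cover 0 <= u < 10 and v >= 0")
    return 1001 + math.floor(u) + 10 * math.floor(v)


def mantra_to_arnold(path):
    return path.replace(MANTRA_TAG, ARNOLD_TAG)


def arnold_to_mantra(path):
    return path.replace(ARNOLD_TAG, MANTRA_TAG)


def resolve_tile(path, tile):
    """Expand either tag style into the concrete file name of one tile."""
    return path.replace(MANTRA_TAG, str(tile)).replace(ARNOLD_TAG, str(tile))
```

For example, `udim_from_uv(0.5, 1.2)` gives 1011, and `resolve_tile("textureName.<UDIM>.exr", 1012)` gives `textureName.1012.exr`.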

There is a triplanar node that can be used with Arnold and a different one called UV triplanar projection for Mantra. I don’t usually work without UVs, but these nodes can be useful when working with terrains or other large surfaces.
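For reference, triplanar projection replaces UVs by projecting the texture along the three world axes and blending the three lookups according to how much the surface normal faces each axis. A minimal sketch of the usual blend, assuming a sharpness exponent similar to the blend controls these nodes expose; the names are illustrative, not either node's parameters.

```python
def triplanar_weights(normal, sharpness=4.0):
    """Blend weights for the X, Y and Z projections of a triplanar lookup.

    Each weight grows with how directly the normal faces that axis; a higher
    sharpness narrows the transition zone between projections.
    """
    ax, ay, az = (abs(c) ** sharpness for c in normal)
    total = ax + ay + az
    if total == 0.0:
        raise ValueError("normal must be non-zero")
    return (ax / total, ay / total, az / total)


def triplanar_sample(position, normal, sample_2d, sharpness=4.0):
    """Blend three planar lookups: YZ plane for X, XZ for Y, XY for Z."""
    x, y, z = position
    wx, wy, wz = triplanar_weights(normal, sharpness)
    return (wx * sample_2d(y, z)
            + wy * sample_2d(x, z)
            + wz * sample_2d(x, y))
```

On a flat terrain facing +Y only the XZ projection contributes, which is why these nodes work well on large surfaces without UVs.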

To subdivide geometry at object level, you can just go to the Arnold tab and select the type and amount of subdivision. If you need to subdivide only a few parts of your Alembic asset, create an unpack node (transferring attributes and groups) and then a subdivide node. This works with both Mantra and Arnold, although there is a better way of doing this with Arnold. We will talk about it in the future.
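Subdividing only where needed matters because Catmull-Clark subdivision multiplies the face count at every level: each n-sided face becomes n quads on the first level, and every quad becomes four quads on each following level. A quick estimate, assuming Catmull-Clark and hypothetical face counts:

```python
def catmull_clark_faces(face_sides, levels):
    """Face count after `levels` Catmull-Clark iterations.

    face_sides: number of sides of each input face, e.g. [4, 4, 3].
    """
    if levels < 0:
        raise ValueError("levels must be >= 0")
    if levels == 0:
        return len(face_sides)
    first = sum(face_sides)  # an n-gon splits into n quads on the first level
    return first * 4 ** (levels - 1)


# Hypothetical asset: 200k quads, of which only 20k need subdividing.
whole = catmull_clark_faces([4] * 200_000, 2)
partial = 180_000 + catmull_clark_faces([4] * 20_000, 2)
```

Two levels on the whole asset give 3.2 million faces; the same two levels on only a tenth of it give 500,000.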

Houdini as scene assembler part 02 /

In the previous post I showed you how to load Alembic caches using the file node and then change the viewport visualization to bounding box. This is good enough if you are, let's say, look-deving a character. But if you want to load a heavy Alembic cache, like a very detailed city with a lot of buildings or a huge spaceship, you might want to use a different approach.

Instead of using a file node, it is better to load your assets with the alembic node, set the Load As option to Alembic Delayed Load Primitives, and display them as bounding boxes. This doesn't actually load the geometry into memory, so it will be much more efficient down the line.

In this post I'm just talking about shading assignment in Houdini.

The easiest way to assign shaders is to select the asset node in the /obj context and assign a shader in its render tab. Your Mantra shaders should be placed in the /mat context and your Arnold shaders in the /shop context, as Arnold does not fully support /mat yet.

In the /mat context you can just create a Mantra Principled Shader. For Arnold, it is better to create an Arnold shader network and then connect any Arnold shader inside it to the surface input.

Houdini doesn't have an isolation mode for shading components like Maya's (as far as I know), but you can drag and drop shaders and textures onto the viewport or IPR while look-deving. This only works in the /mat context (again, as far as I know).

Another way of assigning shaders is to create material nodes inside the alembic node. These material nodes can be assigned to different parts of your asset using wildcards. To assign multiple materials, you can create different tabs in one material node or just concatenate material nodes (which I prefer). This technique works with both Mantra and Arnold.
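When material nodes are concatenated, each one writes its assignment onto the primitives matching its pattern, so in this sketch a later node overrides an earlier one where their patterns overlap. It uses Python's fnmatch for the wildcards, with hypothetical Alembic paths and material names; Houdini's own pattern syntax has more features than this.

```python
from fnmatch import fnmatchcase


def assign_materials(prim_paths, rules):
    """Apply (pattern, material) rules in order; later matches override earlier ones."""
    assignment = {path: None for path in prim_paths}
    for pattern, material in rules:
        for path in prim_paths:
            if fnmatchcase(path, pattern):
                assignment[path] = material
    return assignment


paths = [
    "/character/body_GEO",
    "/character/eyes/eye_L_GEO",
    "/character/eyes/eye_R_GEO",
    "/character/teeth_GEO",
]
rules = [
    ("/character/*", "/mat/rest_SHD"),      # first material node: everything
    ("/character/body*", "/mat/body_SHD"),  # second node: the body only
    ("*/eye_*_GEO", "/mat/eye_SHD"),        # third node: both eyes
]
result = assign_materials(paths, rules)
```

Ordering broad patterns first and specific ones later keeps each node simple, which is one reason chaining nodes can be easier to read than many tabs in one node.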

Most of the time you will find yourself creating material networks (Mantra) or shop networks (Arnold) containing all the shaders of your asset. In a lighting shot you will end up with a different subnetwork for each asset in the shot.

These shader subnetworks can be placed at the /obj level or inside the alembic nodes containing your assets.

Another clever way of assigning shaders is using the data tree -> object appearance. This only works at object level. If you want to go deeper into your Alembic asset, you first need to add a packed edit node. Then, in the data tree, you will have access to all the different parts of your asset.

There is another way of controlling looks in Houdini, and that is using the material style sheets. We will cover this tool in future posts.

A bit more Gaffer /

I keep playing with Gaffer and discovering how to do things that I’m used to doing in other software.

Introduction to Gaffer /

From Gaffer HQ: Gaffer is a free, open-source, node-based VFX application that enables look developers, lighters, and compositors to easily build, tweak, iterate, and render scenes. Built with flexibility in mind, Gaffer supports in-application scripting in Python and OSL, so VFX artists and technical directors can design shaders, automate processes, and build production workflows.

With hooks in both C++ and Python, Gaffer's readily extensible API provides both professional studios and enthusiasts with the tools to add their own custom modules, nodes, and UI.

The workhorse of the production pipeline at Image Engine Design Inc., Gaffer has been used to build award-winning VFX for shows such as Jurassic World: Fallen Kingdom, Lost in Space, Logan, and Game of Thrones.

Ricoh Theta for image acquisition in VFX /

This is a very quick overview of how I use my tiny Ricoh Theta for lighting acquisition in VFX. I always use one of my two traditional setups for capturing HDRIs and bracketed textures, but on top of that I use a Theta as a backup. Sometimes, if I don't have enough room on set, I might only use a Theta, but this is not ideal.

There is no way to control this camera manually, which is a shame! But with an iPhone app like Simple HDR you can at least do bracketing. You still can't control it manually, but it is something.

As always when capturing camera data, you will need a Macbeth chart.

For HDRI acquisition it is always extremely important to have good references for your lighting distribution, density, temperature, reflections and shadows. Spheres are a must.

For this particular exercise I'm using a mini Manfrotto tripod to place my camera approximately 50 cm above the ground.

This is the equirectangular map that I got after merging 7 brackets generated automatically with the Theta. There are 2 major disadvantages compared with the panoramas you typically get using a traditional DSLR + fisheye setup:

- Poor resolution, artefacts and aberrations

- Poor dynamic range

I use Merge to HDR Pro in Photoshop to merge my brackets. It is very fast and it actually works. Beyond that, never use Photoshop to work with data images.
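Photoshop's HDR merge does more than this (alignment, deghosting, response-curve recovery), but the core of any bracket merge is simple: bring each exposure to a common scale by dividing by its exposure time, then average with weights that distrust nearly black and nearly clipped pixels. A minimal per-pixel sketch, assuming the brackets are already linear and normalised to 0-1; the thresholds are illustrative.

```python
def hat_weight(value, low=0.02, high=0.98):
    """Trust mid-tones; ignore pixels near black (noise) or near clipping."""
    if value <= low or value >= high:
        return 0.0
    mid = 0.5 * (low + high)
    return 1.0 - abs(value - mid) / (mid - low)


def merge_pixel(values, exposure_times):
    """Merge one pixel's linear bracket values into a relative radiance."""
    num = den = 0.0
    for value, t in zip(values, exposure_times):
        w = hat_weight(value)
        num += w * value / t
        den += w
    if den > 0.0:
        return num / den
    # No usable bracket: a clipped pixel is best read from the shortest
    # exposure, a black one from the longest.
    order = sorted(range(len(values)), key=lambda i: exposure_times[i])
    i = order[0] if values[order[0]] > 0.5 else order[-1]
    return values[i] / exposure_times[i]
```

A bright source that clips in every bracket except the shortest is recovered from that one exposure alone, which is why the shortest bracket decides how much of the sun you keep.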

Once the panorama has been stitched, move to Nuke to neutralise it.

Start by neutralising the plate.

Linearization first, followed by white balance.

Copy the grading from the plate to the panorama.
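The linearise-then-white-balance order above can be sketched per pixel. This assumes the values were sampled from sRGB-encoded images and that you have sampled a neutral grey patch of the Macbeth chart on the plate; the 0.18 target and the helper names are illustrative assumptions, not what the Nuke tools do internally.

```python
def srgb_to_linear(c):
    """Inverse of the sRGB transfer function (IEC 61966-2-1), for 0-1 values."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def white_balance_gains(grey_patch_rgb, target=0.18):
    """Per-channel gains that map the sampled grey patch to a neutral target."""
    return tuple(target / channel for channel in grey_patch_rgb)


def neutralise(rgb, gains):
    return tuple(c * g for c, g in zip(rgb, gains))


# Hypothetical grey patch sampled from the plate (sRGB-encoded, slightly warm).
patch_linear = tuple(srgb_to_linear(c) for c in (0.50, 0.46, 0.40))
gains = white_balance_gains(patch_linear)
```

Copying the grading to the panorama then amounts to applying the same `gains` to every linearised panorama pixel.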

Save the maps, go to Maya and create an IBL setup.

The dynamic range of the panorama is very low compared with what we would have if we were using a traditional DSLR setup. I'm afraid this means that our key light is not going to work very well.

If we compare the CG against the plate, we can easily see that the sun is not working at all.

At this point, the best way to fix this issue is to go back to Nuke and remove the sun from the panorama. Then crop the sun region and save it as an HDR texture to be mapped onto a CG light.

Map the HDR texture onto an area light in Maya and place it accordingly.

Now we should be able to match the key light much better.

Final render.

Quick and dirty free IBLs /

Some of my spare IBLs that I shot a while ago using a Ricoh Theta. They contain around 12 EV of dynamic range. The resolution is not great, but it still holds up for look-dev and lighting tasks.
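One way to check a figure like "around 12 EV" yourself is to compare the brightest and the darkest non-zero pixel luminance of the panorama: each EV (stop) doubles the light, so the range in stops is the base-2 logarithm of their ratio. A minimal sketch, assuming linear RGB values and Rec. 709 luminance weights; in practice you would ignore a few outlier pixels.

```python
import math


def luminance(rgb):
    """Rec. 709 luminance of a linear RGB triple."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def dynamic_range_ev(pixels):
    """Stops between the darkest non-zero pixel and the brightest one."""
    positive = [l for l in (luminance(p) for p in pixels) if l > 0.0]
    if not positive:
        raise ValueError("image has no positive pixels")
    return math.log2(max(positive) / min(positive))
```

A result of 12 means a 2^12 = 4096:1 ratio between the brightest and darkest values.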

Feel free to download the equirectangular .exrs here.

Please do not use them in commercial projects.

Cafe in Barcelona.

Cafe in Barcelona render test.

Hobo hotel.

Hobo hotel render test.

Campus i12 green room.

Campus i12 green room render test.

Campus i12 class.

Campus i12 class render test.

Chiswick Gardens.

Chiswick Gardens render test.

Hard light / soft light / specular light / diffuse light /

These days we are lucky enough to be able to apply the same photographic and cinematographic principles to our work as visual effects artists lighting shots. That's why we are always talking about cinematography and cinematic language. Today we are going to talk about some very common concepts in the cinematography world: hard light, soft light, specular light and diffuse light.

The main difference between hard light and soft light does not lie in the light itself but in the shadows. When the shadow is perfectly defined and opaque, we talk about hard light. When the shadows are diffuse, we call it soft light; the shadows will also be less opaque.

Is there any specific lighting source that creates hard or soft lighting? The answer is no. Any light can create hard or soft lighting depending on two factors.

- Size: not only the size of the lighting source itself, but its size relative to the subject being illuminated.

- Distance: how far the lighting source is placed from the subject.

Diffraction refers to various phenomena that occur when a wave encounters an obstacle or a slit. It is defined as the bending of light around the corners of an obstacle or aperture into the region of geometrical shadow of the obstacle. At the scale of a lamp and a person, though, this bending is tiny; what softens a shadow is mostly geometry, namely how many different directions the light reaches the subject from.

When the lighting source is similar in size to the object it hits, light arrives from slightly different directions, so rays from different points of the source reach a little way past the object's edges into its shadow.

If the lighting source is smaller than the object, or it is placed far away from it, the rays arrive almost parallel, creating very hard, well-defined shadows.

If the lighting source is bigger than the subject and placed near it, the rays arrive from a wide range of directions and wrap around the subject a lot, generating soft shadows.

If the lighting source is much bigger than the subject and placed near it, rays from opposite sides of the source overlap behind the subject to the point that they mix. Consequently, the profile of the subject is no longer represented in the shadows.

If a big lighting source is placed very far from the subject, its apparent size relative to the subject becomes small, and it behaves like a small lighting source, generating hard shadows. The most common example of this is the sun: it is huge, but it is so far away that it still generates hard light. Only on cloudy days does the sunlight get diffused by the clouds.

In two lines:

- Soft light: big lighting sources and/or sources close to the subject.

- Hard light: small lighting sources and/or sources far from the subject.
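Size and distance can be combined into one number with similar triangles: for a light of width D at distance a from the edge of the subject, and a surface a further distance b behind that edge, the soft edge of the shadow (the penumbra) is roughly D · b / a wide. A small sketch with illustrative numbers; the sun's diameter and distance are the usual astronomical averages.

```python
import math


def penumbra_width(source_size, source_to_edge, edge_to_surface):
    """Approximate width of a shadow's soft edge (similar triangles)."""
    return source_size * edge_to_surface / source_to_edge


def angular_size_deg(source_size, distance):
    """How big the light looks from the subject, in degrees."""
    return math.degrees(2.0 * math.atan(0.5 * source_size / distance))


# A 1 m softbox, 2 m from the subject, shadow cast on a wall 1 m behind it.
softbox = penumbra_width(1.0, 2.0, 1.0)          # 0.5 m: soft light
# The sun: about 1.39e9 m wide and 1.496e11 m away, same 1 m gap to the wall.
sun = penumbra_width(1.39e9, 1.496e11, 1.0)      # about 9 mm: hard light
sun_angle = angular_size_deg(1.39e9, 1.496e11)   # about half a degree
```

The sun is enormous, but seen from the subject it is only about half a degree wide, which is exactly why it behaves like a small source.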

Specular light: a lighting source that is very powerful in the center and gradually loses energy toward its edges, like a traditional torch. It generates very exposed, bright areas on the subject, like the lights used in photo calls and interviews.

Diffuse light: a lighting source with uniform energy across its whole surface. The lighting tends to be more even when it hits the subject's surface.

Diffuse light and soft light are not the same. When we talk about soft light, we are talking about soft shadows. When we talk about diffuse light, we are talking about how the light is distributed: evenly across the surface of the source.

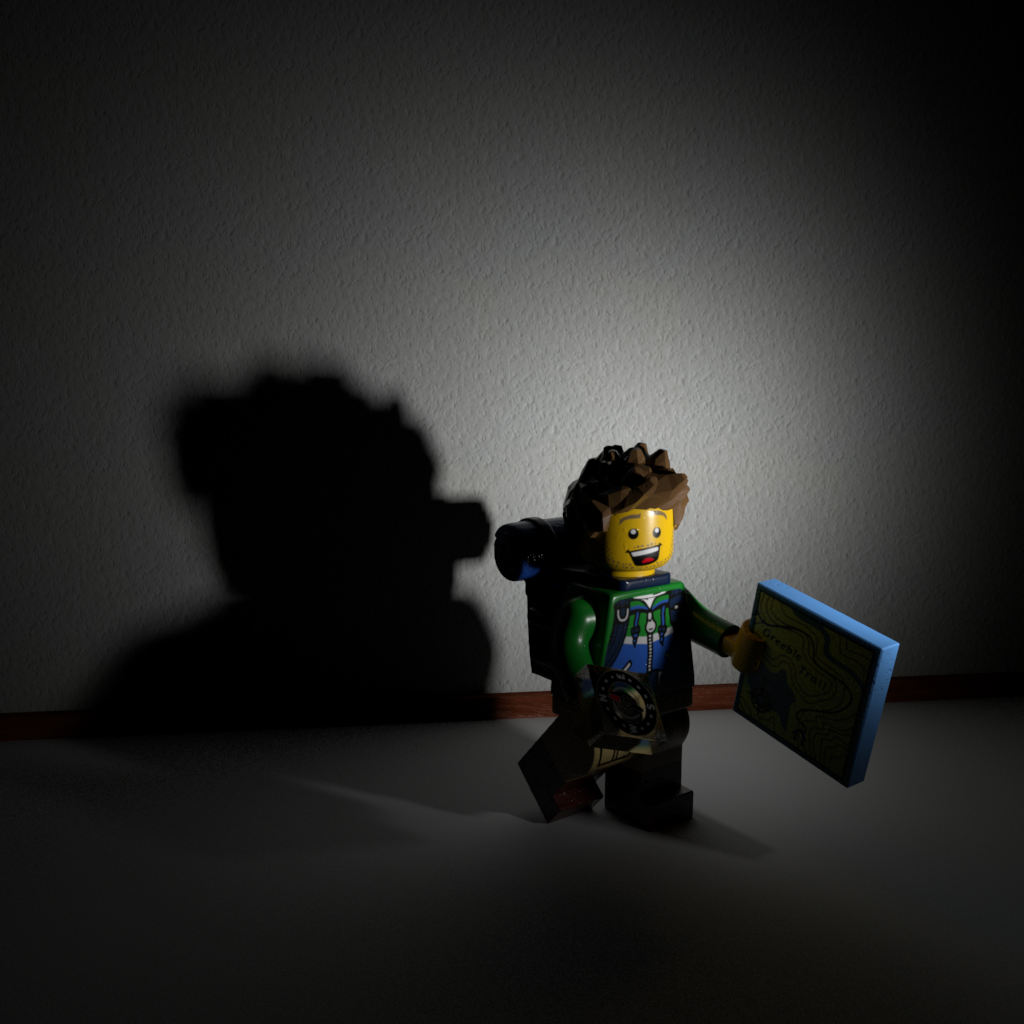

Some 3D examples with Lego figures.

- Here the character is being lit by a small lighting source, smaller than the character itself and placed far from the subject. We get hard light, hard shadows.

- Here we have a bigger lighting source, pretty much the same size as the character and placed close to it. We get soft lighting, soft shadows.

- This is a big lighting source, much bigger than the subject. We now get extra soft lighting, losing the shape of the shadows.

- Now the character is being lit by the sun. The sun is a huge lighting source, but being placed far, far away from the subject, it behaves like a small lighting source and generates hard light.

- Finally, there is another example of very hard light caused by the camera flash, another very powerful, concentrated point of light placed very close to the subject. You can get this in 3D by greatly reducing the spread value of the light.

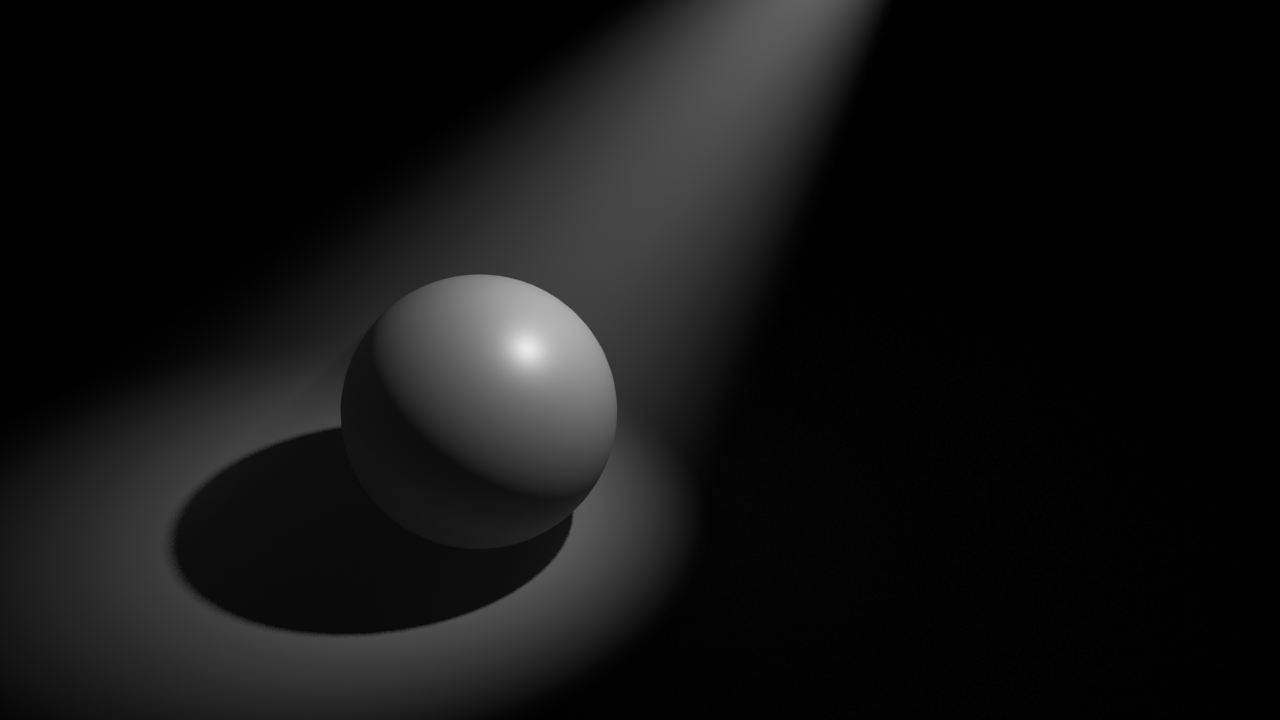

- Now a couple of images for specular and diffuse light.

Central Saint Martins free HDRI /

I shot another HDRI for Akromatic that you can download for free for your look-dev and lighting tests.

Check it out at the Akromatic website.

Environment reconstruction + HDR projections /

I've been working on the reconstruction of this fancy environment in Hackney Wick, East London.

The idea behind this exercise was to recreate the environment in terms of shape and volume, and then project HDRIs onto the geometry. Doing this, we get more accurate lighting contribution, occlusion, reflections and color bleeding, and much better interaction between the environment and 3D assets, which basically means better integration for our VFX shots.

I tried to make it as simple as possible, spending just a couple of hours on location.

- The first thing I did was draw some diagrams of the environment and then, using a laser measure, cover the whole place, writing down all the information needed later for the virtual reconstruction.

- Then I made a quick map of the environment in Photoshop with all the relevant information, just to keep all my annotations clean and tidy.

- Drawings and annotations would have been good enough for this environment, just because it's quite simple. But to make it better, I decided to scan the whole place. LiDAR scanning is probably the best solution for this, but I decided to use photogrammetry. I know it takes more time, but you get textures at the same time: not just texture placeholders, but true HDR textures that I can use later for projections.

- I took around 500 images of the whole environment and ended up with a very dense point cloud. Just perfect for geometry reconstruction.

- For the photogrammetry process I took around 500 shots, each one composed of 3 bracketed exposures, 3 stops apart. This gives me a good dynamic range for this particular environment.

- I combined the 3 brackets to create rectilinear HDR images, then exported them as both HDR and LDR. The EXR HDRs are used for texturing and the JPG LDRs for photogrammetry.

- I also shot a few equirectangular HDRIs with an even higher dynamic range, then projected these in Mari using the environment projection feature. Once I had completed the projections from the different tripod positions, I covered the remaining areas with the rectilinear HDRs.

- These are the five different HDRI positions and some render tests.

- The next step is to create a proxy version of the environment. With the 3D scan this is very simple to do, and the final geometry will be very accurate because it's based on photos of the real environment. You could also build a very detailed model, but in this case the proxy version was good enough for what I needed.

- Then, high-resolution UV mapping is required to get good texture resolution. Every one of my photos is 6000x4000 pixels. The idea is to project some of them (we don't need all of them) through the photogrammetry cameras. This means great texture resolution if the UVs are good. We could even create full 3D shots and the resolution would hold up.

- After that, I imported into Mari a few cameras exported from Photoscan along with the corresponding rectilinear HDR images, applied the same lens distortion to them, and projected them in Mari and/or Nuke through the cameras, always preserving the dynamic range.

- Finally, I exported all the UDIMs (around 70) to Maya, all of them 16-bit images with the original dynamic range required for 3D lighting.

- After mipmapping them, I did some render tests in Arnold and everything worked as expected. I can play with the exposure and get great lighting information from the walls, floor and ceiling. I also did a few render tests with this old character.
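The bracketing arithmetic behind "3 exposures, 3 stops apart" is worth having at hand: each stop doubles the exposure time, so the brackets add (n - 1) × spacing stops on top of what a single exposure captures. A minimal sketch with a hypothetical middle shutter speed of 1/250 s:

```python
from fractions import Fraction


def bracket_times(middle_time, count, stops_apart):
    """Shutter times for an odd number of brackets centred on `middle_time`."""
    if count % 2 == 0:
        raise ValueError("use an odd number of brackets so one is centred")
    half = count // 2
    return [middle_time * Fraction(2) ** ((i - half) * stops_apart)
            for i in range(count)]


def extra_range_stops(count, stops_apart):
    """Stops added on top of a single exposure's dynamic range."""
    return (count - 1) * stops_apart


times = bracket_times(Fraction(1, 250), 3, 3)  # 1/2000 s, 1/250 s, 4/125 s
```

With 3 brackets 3 stops apart, each of the roughly 500 positions gains 6 stops over a single exposure, at the cost of about 1,500 frames to merge.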

mmColorTarget /

This is a very quick demo of how to install the mmColorTarget gizmo on a Mac and how to use it, or at least how I use it in my texturing, reference and lighting process. The gizmo itself was created by Marco Meyer.

IBL and sampling in Clarisse /

Using IBLs with huge ranges for natural light (sun) is just great. They give you very consistent lighting conditions, and the behaviour of the shadows is fantastic.

But sampling those massive values can be a bit tricky sometimes. Your render will have a lot of noise and artifacts, and you will have to deal with tricks like creating cropped versions of the HDRIs or clamping values in Nuke.

Fortunately in Clarisse we can deal with this issue quite easily.

Shading, lighting and anti-aliasing are completely independent in Clarisse. You can tweak one of them without affecting the others, saving a lot of rendering time. In many renderers, shading sampling is multiplied by anti-aliasing sampling, which forces users to tweak all the shaders in order to get decent render times.
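A back-of-the-envelope way to see why the coupling matters, under a deliberately simplified model (an assumption; real renderers add adaptive sampling and other optimisations): counting one primary ray per AA sample, a coupled renderer fires the full shading budget for every AA sample, while a decoupled one keeps a fixed shading budget per pixel.

```python
def rays_coupled(aa_samples, shading_samples):
    """Simplified model: every AA sample fires the full shading sample count."""
    return aa_samples * (1 + shading_samples)


def rays_decoupled(aa_samples, shading_samples):
    """Simplified model: a fixed per-pixel shading budget, independent of AA."""
    return aa_samples + shading_samples
```

With 8 AA samples and 16 reflection samples the coupled model costs 136 rays per pixel against 24; doubling AA to clean up edges doubles the coupled cost to 272, but only raises the decoupled one to 32.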

- We are going to start with this noisy scene.

- The first thing you should do is change the Interpolation Mode to MipMapping in the Map File of your HDRI.

- Then we need to tweak the shading sampling.

- Go to the raytracer and activate previz mode. This removes the lighting information from the scene, so all the noise you see now comes from the shaders.

- In this case we get a lot of noise from the sphere. Just go to the sphere's material and increase the reflection quality under sampling.

- I increased the reflection quality to 10 and can't see any noise in the scene any more.

- Select the raytracer again and deactivate previz mode. All the noise now comes from lighting.

- Go to the gi_monte_carlo light and disable affect diffuse. This way GI won't affect the lighting, and we only have direct lighting. If you see some noise, just increase the sampling of your direct lights.

- Go back to the gi_monte_carlo light and re-enable affect diffuse. Increase its quality until the noise disappears.

- The render is noise-free now, but it still looks a bit low-res because of the anti-aliasing. Go to the raytracer and increase the samples. Now the render looks just perfect.

- Finally, there is a global sampling setting that you usually won't have to play with. But just for your information, setting shading oversampling to 100% multiplies the shading rays by the anti-aliasing samples, like most render engines out there. This helps refine the render, but rendering times will increase quite a bit.

- Now, if you want quick and dirty results for look-dev or lighting, just play with the image quality. You will not get pristine renders, but they will be good enough for establishing looks.
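Raising a quality value "until the noise disappears" gets expensive quickly because Monte Carlo noise falls with the square root of the sample count: halving the noise takes four times the samples. The sketch below states that rule and checks it empirically with uniform random samples; it assumes the quality parameters map roughly linearly to sample counts, which may not be exactly how Clarisse scales them.

```python
import math
import random
import statistics


def samples_for_target_noise(samples, noise, target_noise):
    """Samples needed to reach `target_noise`, given noise ~ 1 / sqrt(samples)."""
    return math.ceil(samples * (noise / target_noise) ** 2)


def measured_noise(samples, trials=2000, seed=1):
    """Std. dev. of a pixel estimated from `samples` random light samples."""
    rng = random.Random(seed)
    estimates = [sum(rng.random() for _ in range(samples)) / samples
                 for _ in range(trials)]
    return statistics.pstdev(estimates)


ratio = measured_noise(16) / measured_noise(64)  # close to 2
```

This is also why isolating the noise source first, as in the steps above, pays off: you only pay the quadratic cost on the component that actually needs it.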

Image Based Lighting in Clarisse /

I've been using Isotropix Clarisse in production for a little while now. Recently the VFX facility where I work announced that it is adopting Clarisse as its primary look-dev and lighting tool, so I decided to start talking about this powerful raytracer on my blog.

Today I'm writing about how to set up image-based lighting.

- We can start by creating a new context called ibl. We will put all the elements needed for ibl inside this context.

- Now we need to create a sphere to use as "world" for the scene.

- This sphere will be the support for the equirectangular HDRI texture.

- I just increased the radius a lot. Keep in mind that this sphere has to enclose all your assets.

- In the image view tab we can see the render in real time.

- Right now the sphere is lit by the default directional light.

- Delete that light.

- Create a new matte material. This material won't be affected by lighting.

- Assign it to the sphere.

- Once assigned, the sphere will look black.

- Create an image to load the HDRI texture.

- Connect the texture to the color input of the matte shader.

- Select the desired HDRI map in the texture path.

- Change the projection type to "parametric".

- HDRI textures are usually 32-bit linear images, so you need to indicate this in the texture properties.

- I created two spheres to check the lighting. Just press "f" to fit them in the viewport.

- I also created two standard materials, one for each sphere. I'm creating lighting checkers here.

- And a plane, just to check the shadows.

- If I go back to the image view, I can see that the HDRI is already affecting the spheres.

- Right now, only the secondary rays are being affected, such as reflections.

- In order to create proper lighting, we need to use a light called "gi_monte_carlo".

- Right now the noise in the scene is insane. This is because of all the crazy detail in the HDRI map.

- The first thing to do to reduce noise is to change the interpolation of the texture to Mipmapping.

- To have a noise free image we will have to increase the sampling quality of the "gi_monte_carlo" light.

- Noise can also be managed with the anti-aliasing sampling of the raytracer.

- The most common approach is to combine raytracer sampling, lighting sampling and shading sampling.

- Around 8 raytracer samples and around 12 lighting samples are common settings in production.

- There is another method to do IBL in Clarisse without the cost of GI.

- Delete the "gi_monte_carlo" light.

- Create an "ambient_occlusion" light.

- Connect the HDRI texture to the color input.

- In the render only the secondary rays are affected.

- Select the environment sphere and deactivate the "cast shadows" option.

- Now everything works fine.

- To clean the noise increase the sampling of the "ambient_occlusion" light.

- This is a cheaper IBL method.
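The "parametric" projection on the world sphere is what lets an equirectangular HDRI wrap around the scene: each direction maps to a longitude (U) and a latitude (V). A minimal sketch of that mapping, assuming a Y-up world with U = 0.5 facing -Z; applications differ in where the seam sits and which way U runs, so treat the orientation, and whether Clarisse uses exactly this one, as assumptions.

```python
import math


def direction_to_uv(x, y, z):
    """Map a world direction to equirectangular UV, both in 0-1."""
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("direction must be non-zero")
    x, y, z = x / length, y / length, z / length
    u = 0.5 + math.atan2(x, -z) / (2.0 * math.pi)          # longitude
    v = 0.5 + math.asin(max(-1.0, min(1.0, y))) / math.pi  # latitude
    return u, v


def uv_to_direction(u, v):
    """Inverse of direction_to_uv."""
    phi = (u - 0.5) * 2.0 * math.pi
    theta = (v - 0.5) * math.pi
    return (math.cos(theta) * math.sin(phi),
            math.sin(theta),
            -math.cos(theta) * math.cos(phi))


def uv_to_pixel(u, v, width, height):
    """Pixel column and row, with row 0 at the top of the image (the zenith)."""
    col = min(int(u * width), width - 1)
    row = min(int((1.0 - v) * height), height - 1)
    return col, row
```

Because every row of the image covers a full 360 degrees, pixels near the poles are heavily stretched, which is one reason very detailed HDRIs can be so noisy to sample.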

Normalize textures in Softimage /

Just a quick video tutorial where I talk about my process to normalize textures in Softimage. Spanish audio.

Would you like to see my tutorials in English? Drop me a line.

Cheers.

Love V-Ray's IBL /

When you work for a big VFX or animation studio you usually light your shots with different complex light rigs, often developed by highly talented people.

But when you are working at home, for small studios, doing freelance tasks or whatever else, you need to simplify your techniques and try to reach the best quality you can.

For those reasons, I have to say that I’m switching from Mental Ray to V-Ray.

One of the features that I most love about V-Ray is the awesome dome light to create image based lighting setups.

Let me tell you a couple of things that make the dome light so great.

- First of all, the technical setup is incredibly simple. Just a few clicks: activate the linear workflow, correct the gamma of your textures and choose a nice HDRI image.

- It is quick and simple to reduce the noise generated by the HDRI image. Increasing the maximum subdivisions and decreasing the threshold should be enough. A max subdivs value between 25 and 50, or 100 at most, should work in common situations, and something like 0.005 is a good value for the threshold.

- Render times are very fast, even with raytracing.

- Even using global illumination the render times are more than good.

- Displacement, motion blur and that kind of heavy stuff are also welcome.

- Another thing that I love about the dome light with HDRI images is the great quality of the shadows. Usually you don’t need to add direct lights to the scene: if the HDRI is good enough, you can match the footage quickly and accurately.

- The dome light has some parameters to control the orientation of your HDRI image, and it is quite simple to get a nice preview in Maya’s viewport.

- In all the renders you can see here, you probably noticed that I’m using an HDRI image with “a lot” of different light sources, around 12 different lights in the picture. In this example I made the background black and replaced all the lights with white spots. It is a good test to get a better idea of how the dome light treats direct lighting. And it is great.

- The natural light is soft and nice.

- These are some of the key reasons why I love V-Ray’s dome light :)

- On the other hand, I don’t like doing look-dev with the dome light. It is really, really slow; I can’t recommend this light for that kind of task.

- The trick is to turn off your dome light and create a traditional IBL setup using a sphere and direct lights, or plug your HDRI image into V-Ray’s environment and turn on global illumination.

- Work there on your shaders and then move on to the dome light again.
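The max subdivs and threshold values mentioned above work together as an adaptive sampler: keep taking samples until the estimated noise drops below the threshold or the sample budget runs out. V-Ray turns subdivs values into sample counts by squaring them; everything else in this loop (batch size, standard-error test, names) is a simplified illustration, not V-Ray's actual algorithm.

```python
import math
import random
import statistics


def adaptive_pixel(sample_fn, min_subdivs=2, max_subdivs=25, threshold=0.005,
                   batch=4):
    """Average `sample_fn()` until its standard error drops below `threshold`.

    The sample budget is max_subdivs ** 2, mirroring how subdivs become sample
    counts in V-Ray; the stopping rule is a simplified stand-in.
    """
    max_samples = max_subdivs ** 2
    initial = min(max(min_subdivs ** 2, 2), max_samples)
    samples = [sample_fn() for _ in range(initial)]
    while len(samples) < max_samples:
        std_error = statistics.stdev(samples) / math.sqrt(len(samples))
        if std_error < threshold:
            break
        samples.extend(sample_fn()
                       for _ in range(min(batch, max_samples - len(samples))))
    return statistics.fmean(samples), len(samples)


rng = random.Random(7)
flat_value, flat_count = adaptive_pixel(lambda: 0.5)          # converges at once
noisy_value, noisy_count = adaptive_pixel(rng.random, min_subdivs=4)
```

A flat area stops after the minimum samples, while a noisy one (a pixel seeing a bright spot in the HDRI, say) uses the whole budget, which is why raising max subdivs and lowering the threshold together cleans up dome-light noise at the cost of render time.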

My favourite V-Ray passes /

Working with V-Ray recently, I discovered that these are the render passes I use most often.

Simple scene, simple asset, simple texture and shading and simple lighting, just to show my render passes and pre-compositing stuff.

- Global Illumination

- Direct lighting

- Normals

- Reflection

- Specular

- Z-Depth

- Occlusion

- Snow (or up/down)

- UVs

- XYZ (or global position)

RGB

GI

Direct lighting

Normals

Occlusion

Reflection

Snow

Specular

UVs

XYZ global position

Slapcomp
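A slap comp from these passes rebuilds the beauty by adding the lighting components back together, and some utility passes can be derived from others. A minimal per-pixel sketch, assuming a scene with no refraction, SSS or self-illumination, and that the GI and direct lighting passes are the already-multiplied (not raw) versions; the snow pass here is derived from world-space normals with Y up, which is one common way to build it, and the depth range is hypothetical.

```python
def add_rgb(*colours):
    """Channel-wise sum of several RGB triples."""
    return tuple(sum(channel) for channel in zip(*colours))


def slapcomp(gi, direct, reflection, specular):
    """Rebuild the beauty from additive lighting passes."""
    return add_rgb(gi, direct, reflection, specular)


def snow_from_normal(normal, up=(0.0, 1.0, 0.0)):
    """Up-facing mask: 1 facing straight up, 0 facing sideways or down."""
    facing = sum(n * u for n, u in zip(normal, up))
    return max(0.0, min(1.0, facing))


def normalise_depth(z, near, far):
    """Map a Z-depth value to 0-1 between hypothetical near and far distances."""
    return max(0.0, min(1.0, (z - near) / (far - near)))


beauty = slapcomp((0.1, 0.1, 0.1), (0.5, 0.4, 0.3),
                  (0.05, 0.05, 0.05), (0.2, 0.2, 0.2))
```

If the slap comp does not match the RGB render, some contribution (refraction, SSS, self-illumination) is missing from the pass list.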