I've been working on the reconstruction of this fancy environment in Hackney Wick, East London.

The idea behind this exercise was recreating the environment in terms of shape and volume, and then project HDRIs on the geometry. Doing this we can get more accurate lighting contribution, occlusion, reflections and color bleeding. Much better environment interaction between 3D assets. Which basically means better integrations for our VFX shots.

I tried to make it as simple as possible, spending just a couple of hours on location.

- The first thing I did was drawing some diagrams of the environment and using a laser measurer cover the whole place writing down all the information needed for later when working on the virtual reconstruction.

- Then I did a quick map of the environment in Photoshop with all the relevant information. Just to keep all my annotations clean and tidy.

- With drawings and annotations would have been good enough for this environment, just because it's quite simple. But in order to make it better I decided to scan the whole place. Lidar scanning is probably the best solution for this, but I decided to do it using photogrammetry. I know it takes more time but you will get textures at the same time. Not only texture placeholders, but true HDR textures that I can use later for projections.

- I took around 500 images of the whole environment and ended up with a very dense point cloud. Just perfect for geometry reconstruction.

- For the photogrammetry process I took around 500 shots. Every single one composed of 3 bracketed exposures, 3 stops apart. This will give me a good dynamic range for this particular environment.

- Combined the 3 brackets to create rectilinear HDR images. Then exported them as both HDR and LDR. The exr HDRs will be used for texturing and the jpg LDR for photogrammetry purpose.

- Also did a few equirectangular HDRIs with even higher dynamic ranger. Then I projected these in Mari using the environment projection feature. Once I completed the projections from different tripod positions, cover the remaining areas with the rectilinear HDRs.

- These are the five different HDRI positions and some render tests.

- The next step is to create a proxy version of the environment. Having the 3D scan this so simple to do, and the final geometry will be very accurate because it's based on photos of the real environment. You could also do a very high detail model but in this case the proxy version was good enough for what I needed.

- Then, high resolution UV mapping is required to get good texture resolution. Every single one of my photos is 6000x4000 pixels. The idea is to project some of them (we don't need all of them) through the photogrammetry cameras. This means great texture resolution if the UVs are good. We could even create full 3D shots and the resolution would hold up.

- After that, I imported in Mari a few cameras exported from Photoscan and the correspondent rectilinear HDR images. Applied same lens distortion to them and project them in Mari and/or Nuke through the cameras. Always keeping the dynamic range.

- Finally exported all the UDIMs to Maya (around 70). All of them 16 bit images with the original dynamic range required for 3D lighting.

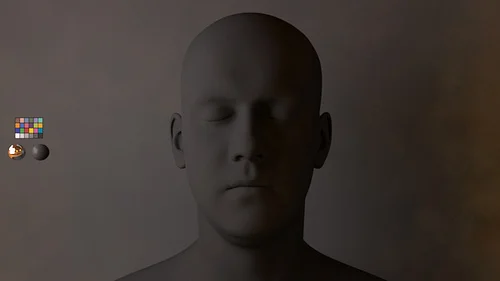

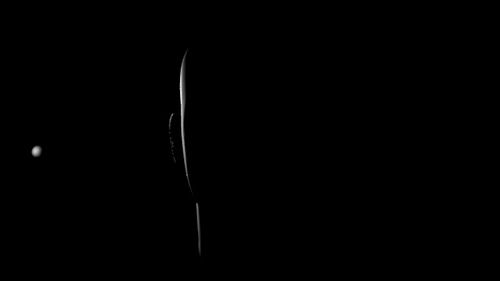

- After mipmapped them I did some render tests in Arnold and everything worked as expected. I can play with the exposure and get great lighting information from the walls, floor and ceiling. Did a few render tests with this old character.